While hunting for a data set to try my DTM python port, I came across this paper, and this repository. The paper itself was quite an interesting read and analysed trends of topics in the European Parliament, but what caught my attention was the algorithm they used to perform this analysis – what they called the Dynamic Non-Negative Matrix Factorisation (NMF).

The reason for my interest in it was obvious, because the authors claimed it to be a method to identify Dynamic Topics in a corpus – the exact same thing I am attempting to do.

The GitHub page mentions their steps to run their algorithm and to clean the data [the dataset on the page is not the same as described in the paper – it is instead of news transcripts split into Entertainment, Business, Politics, Technology and Football spanning over 3 months]. I decided to clean the data myself, and store it in the Blei-LDA format – it becomes easier to use it for my DTM code that way if I want to. Cleaning involved the usual – splitting up each document line by line and then word by word, removing stop words, and using the handy Gensim corpora class to store it to disk in the Blei format. The authors of the paper have described their own way of cleaning (by running the scripts on their repo), so you can do that as well.

Soon after getting my corpus ready, I sat down to figure out how exactly the Dynamic NMF works – only to realise it is quite, quite different from the Dynamic Topic Model. While the idea of identifying the evolution of topics over time, the approach was quite different. Before getting into it, if you aren’t sure what LDA or DTM is, have a look at this and the resources there to refresh your memory/understand it.

So in Blei’s DTM, the hyperparameters of the previous time-slice influence the ones on the next – that is to say, the words in the topics of an older time slice directly affect the words of the topic in the next time-slice. In Dynamic NMF, the idea is to ‘discover’ what the authors call dynamic topics.

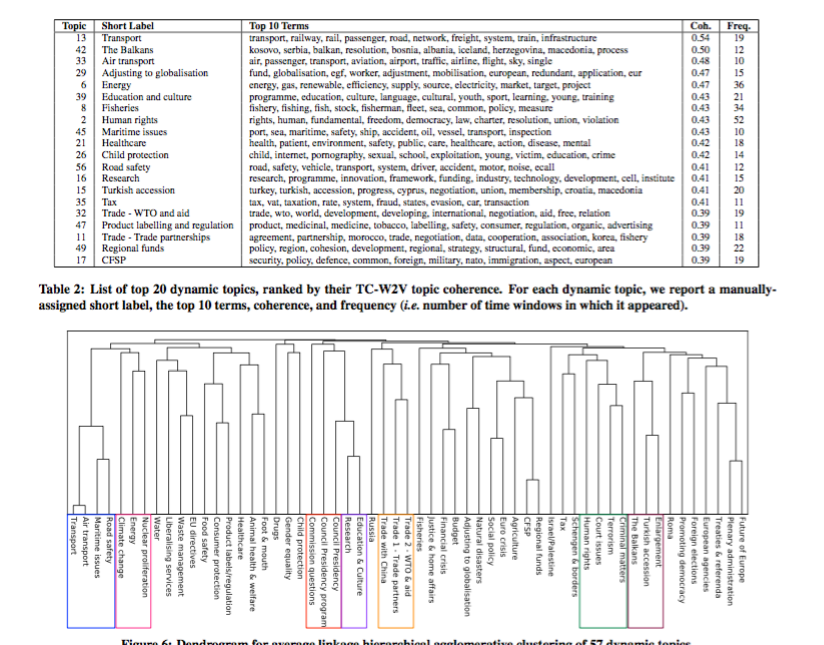

They do this in 2 steps – first, by separating the corpus into it’s time-slices or windows. The NMF algorithm is then run on each of these windows, and we get topics for each time-slice. Then, another matrix is constructed which contains the top terms from each matrix as it’s columns. In the second step the NMF algorithm is applied again, with the number of topics decided after applying a W2V Coherence measure described in the paper. I’ve diluted the exact approach to make it more palatable – I would highly recommend going through the ‘Methods’ section of the paper and go through it yourself.

What the above means is that there isn’t an identification of topics from the very start – rather, after finding a certain number of topics in each time-slice, the ones which are semantically similar are grouped together as ‘dynamic topics’. This is quite different from the original DTM, where topics are fixed from the start and evolve in each time-slice. We can also see a similar evolution in NMF, but it’s a little ‘looser’. The words in DTM tend to not change so rapidly in each time-slice.

Both are effective methods but have different approaches and give slightly different results. I’ll try and do a blog post next week comparing the results of both on the same corpus – and hopefully with my DTM python wrapper!

RARE Technologies

RARE Technologies