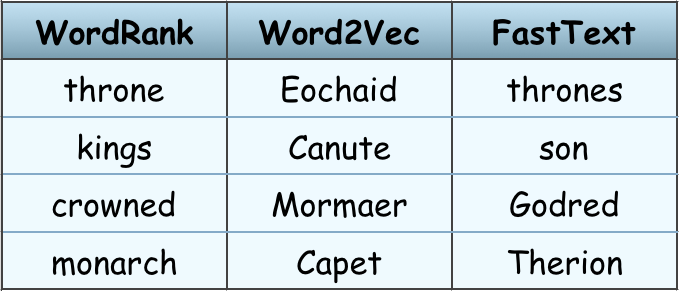

Comparisons to Word2Vec and FastText with TensorBoard visualizations. With so many embedding models released recently, choosing one can be difficult. Should you simply go with those widely used in the NLP community, such as Word2Vec, or could some other model be more accurate for your use case? There are some evaluation metrics …

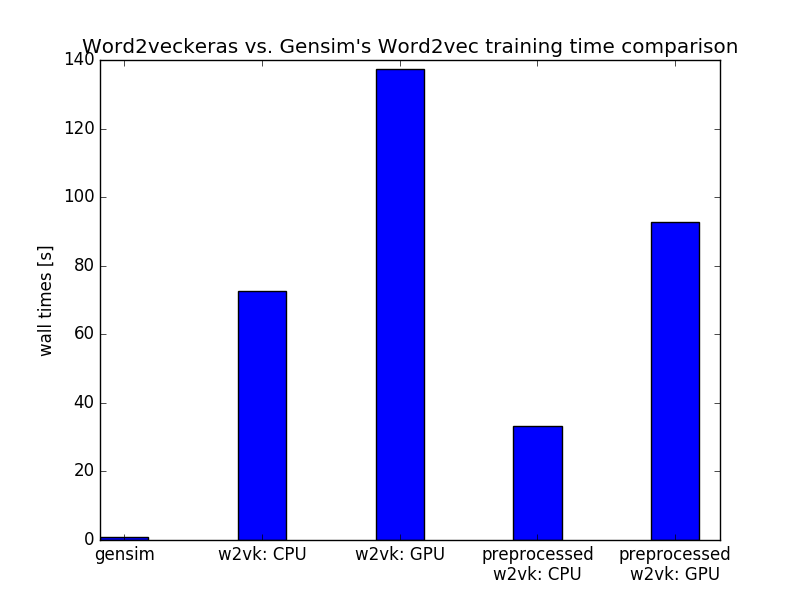

Gensim word2vec on CPU faster than Word2veckeras on GPU (Incubator Student Blog)

Word2Vec became so popular mainly thanks to huge improvements in training speed, which let it produce high-quality word vectors of much higher dimensionality than the then widely used neural network language models. Word2Vec is an unsupervised method that can process potentially huge amounts of data without the need for manual labeling. There is really no limit to the size of a dataset that can …

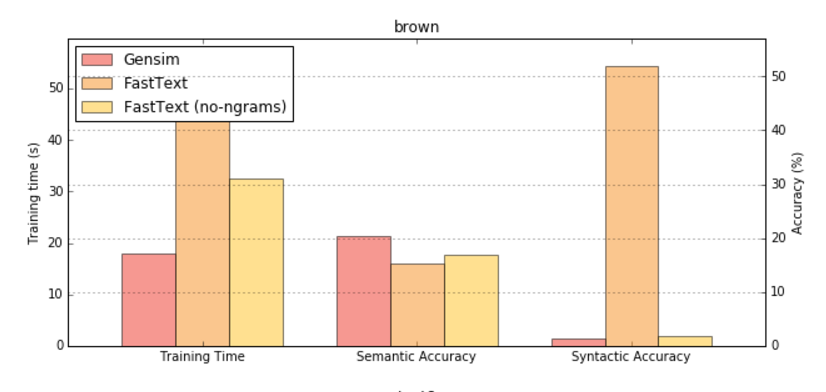

FastText and Gensim word embeddings

Facebook Research recently open-sourced a great project: fastText, a fast (no surprise) and effective method to learn word representations and perform text classification. I was curious how these embeddings compare to other commonly used embeddings, so word2vec seemed like the obvious choice, especially considering that fastText embeddings are an extension of word2vec. The main goal of the fastText …

RARE Technologies
