Docstrings in open source Python

Gensim Survey 2018

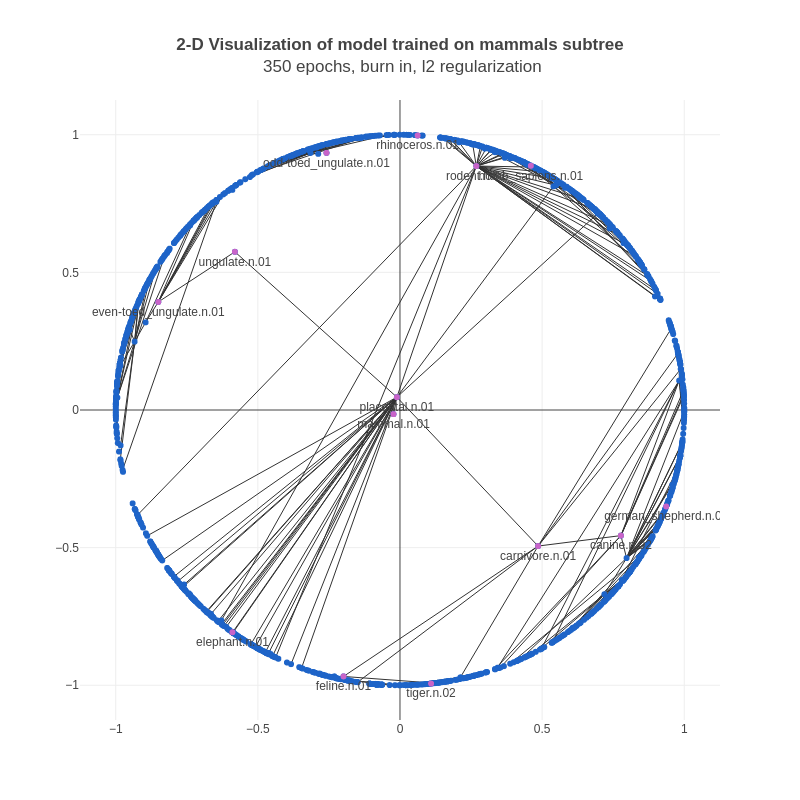

Implementing Poincaré Embeddings

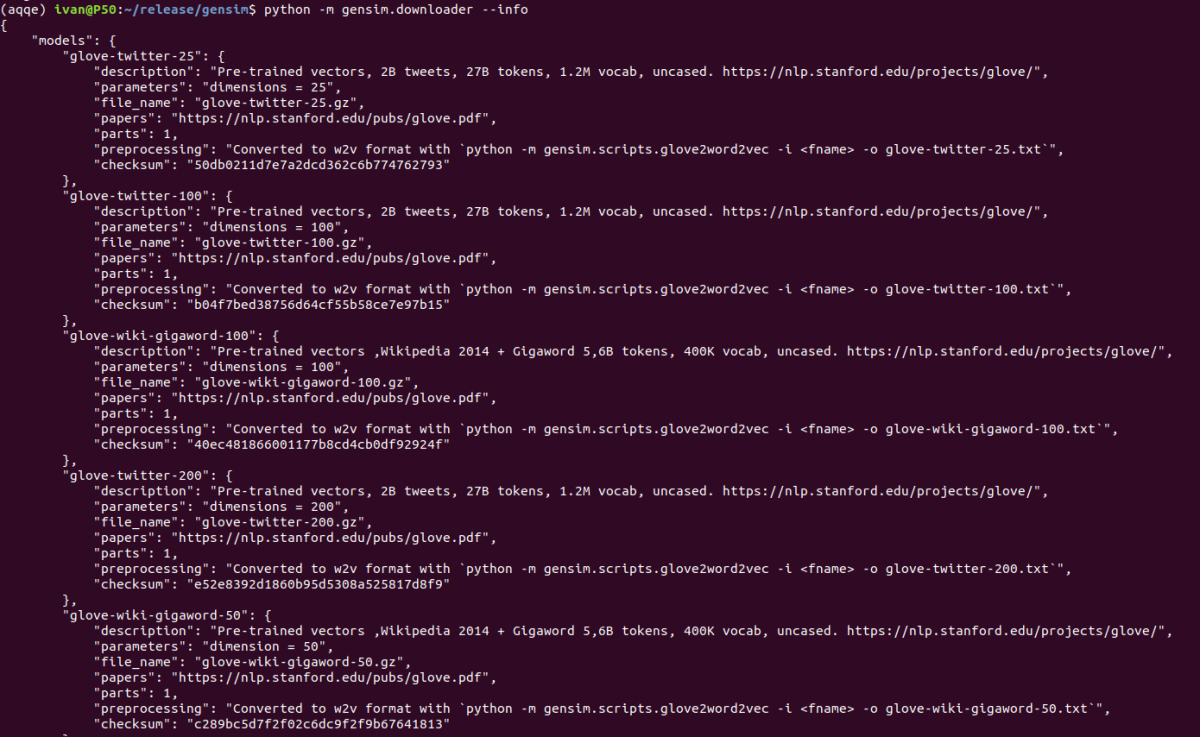

New download API for pretrained NLP models and datasets in Gensim

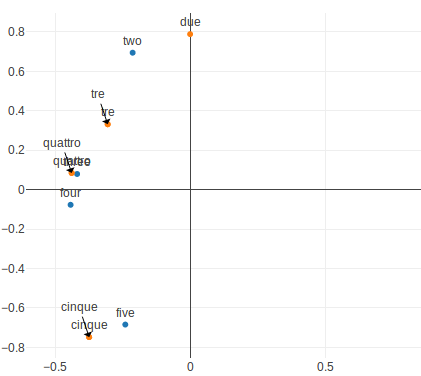

Translation Matrix: how to connect “embeddings” in different languages?

Chinmaya’s GSoC 2017 Summary: Integration with sklearn & Keras and implementing fastText

This blog post summarizes the work that I did for Google Summer of Code 2017 with Gensim. My work during the summer was divided into two parts: integrating Gensim with scikit-learn & Keras, and adding a Python implementation of the fastText model to Gensim. Gensim integration with scikit-learn and Keras: Gensim is a topic modelling and information extraction library which mainly serves unsupervised …

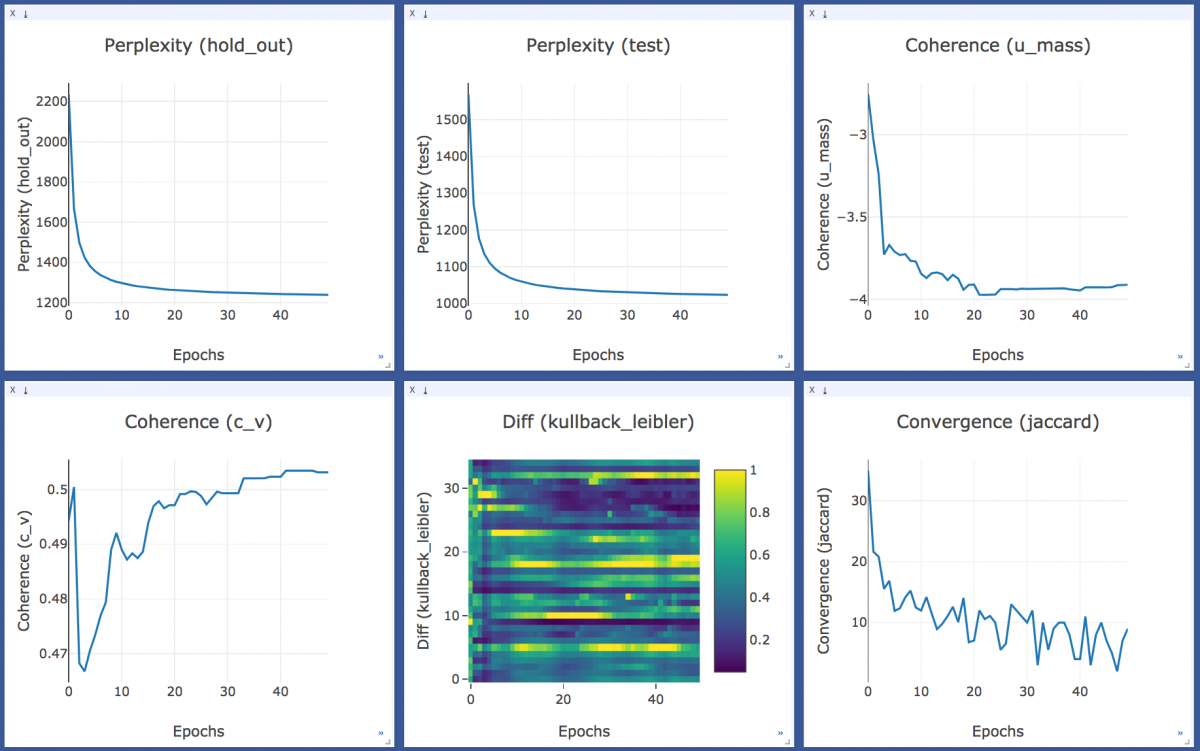

Parul’s GSoC 2017 summary: Training and Topic visualizations in Gensim

This blog post summarizes my work during Google Summer of Code 2017. My task was to implement topic modeling visualizations that help users interactively analyze their topic models and get the best out of their data. I worked on adding two types of visualizations: 1. to monitor the training process of LDA with the help of …

Chinmaya’s Google Summer of Code 2017 Live-Blog: A Chronicle of Integrating Gensim with scikit-learn and Keras

2nd September, 2017: The final blog post in the GSoC 2017 series, summarising all the work that I did this summer, can be found here. 15th August, 2017: During the last two weeks, I had been working primarily on adding a Python implementation of Facebook Research’s fastText model to Gensim. I was also simultaneously working on completing the tasks left for adding scikit-learn API for …

Parul’s Google Summer of Code 2017 Live-Blog: A Chronicle of Adding Training and Topic Visualizations in Gensim

19th August 2017: For the last phase of my project, I’ll be adding a visualization that attempts to overcome some of the limitations of existing topic model visualizations. Current visualizations focus mostly on topics or topic-term relations, leaving little scope to comprehensively explore the document entity. I’ll work on an interface which would allow us to interactively …

RARE Technologies