If you’ve learned how to train topic models in Gensim but aren’t getting satisfying results, we have a new tutorial on GitHub that will help you get on the right track. Primarily, you will learn about pre-processing text data for the LDA model. You will also get some tips about how to set the parameters …

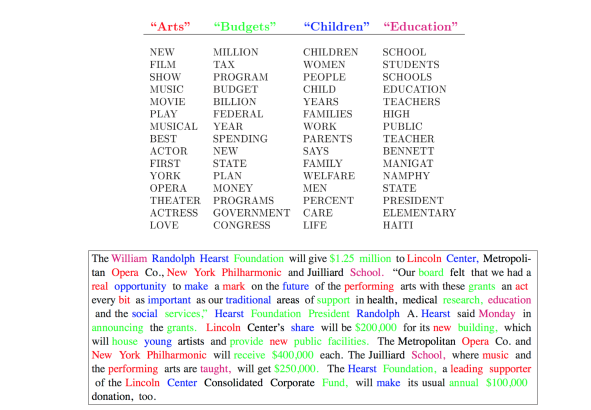

Topic Modelling and Coloring Document Words

My second Google Summer of Code blog post is going to be a wee bit more technical – I’m going to briefly describe what topic models do, before linking to a tutorial I wrote which will teach you how to do some cool stuff with Topic Models and gensim. Very, very briefly – given a collection of documents, topic models …

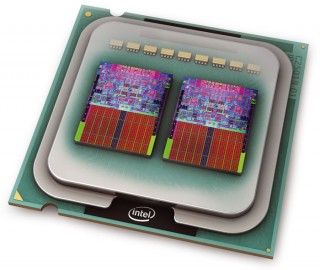

Multicore LDA in Python: from over-night to over-lunch

Latent Dirichlet Allocation (LDA), one of the most used modules in gensim, has recently received a major performance revamp. The new LdaMulticore class now uses all your machine cores at once, so chances are it will be limited by the speed at which you can feed it input data. Make sure your CPU fans are in working order!

Tutorial on Mallet in Python

MALLET, the “MAchine Learning for LanguagE Toolkit”, is a brilliant software tool. Unlike gensim, “topic modelling for humans”, which uses Python, MALLET is written in Java and spells “topic modeling” with a single “l”. Dandy.

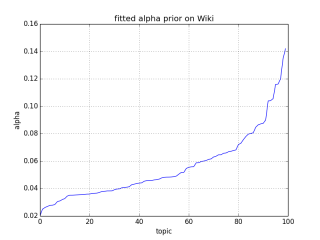

Asymmetric LDA Priors, Christmas Edition

The end of the year is proving crazy busy as usual, but gensim acquired a cool new feature that I just had to blog about. Ben Trahan sent a patch that allows automatic tuning of Latent Dirichlet Allocation (LDA) hyperparameters in gensim. This means that an optimal, asymmetric alpha can now be trained directly from your data.

RARE Technologies
