With 2016 racing by and so much on the front burner, it is still a good time to pause for a quick update on the launch of my new machine learning company, RaRe Technologies. The Start of Something Exciting: I’ve heard from a few people who were confused when they received a recent newsletter from “RaRe Technologies”, when they signed up for …

Go, Games, Strategy and Life: The Big Picture

Making sense of word2vec

One year ago, Tomáš Mikolov (together with his colleagues at Google) made some ripples by releasing word2vec, an unsupervised algorithm for learning the meaning behind words. In this blog post, I’ll evaluate some extensions that have appeared over the past year, including GloVe and matrix factorization via SVD.

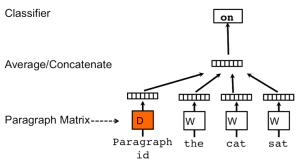

Doc2vec tutorial

The latest gensim release, 0.10.3, has a new class named Doc2Vec. All credit for this class, which is an implementation of Quoc Le & Tomáš Mikolov: “Distributed Representations of Sentences and Documents”, as well as for this tutorial, goes to the illustrious Tim Emerick. Doc2vec (aka paragraph2vec, aka sentence embeddings) modifies the word2vec algorithm to unsupervised learning of continuous …

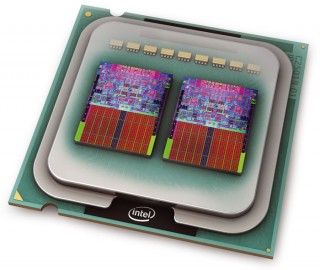

Multicore LDA in Python: from over-night to over-lunch

Latent Dirichlet Allocation (LDA), one of the most used modules in gensim, has received a major performance revamp recently. Now that it uses all your machine's cores at once, chances are the new LdaMulticore class is limited only by the speed at which you can feed it input data. Make sure your CPU fans are in working order!

Data streaming in Python: generators, iterators, iterables

There are tools and concepts in computing that are very powerful but potentially confusing even to advanced users. One such concept is data streaming (aka lazy evaluation), which can be realized neatly and natively in Python. Do you know when and how to use generators, iterators and iterables?
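As a minimal sketch of the distinction (the names `squares_generator` and `SquaresIterable` are made up for this illustration and do not come from the post), a generator can be consumed only once, while an iterable hands out a fresh stream on every pass:

```python
def squares_generator(n):
    """A generator function: calling it returns a one-shot iterator."""
    for i in range(n):
        yield i * i  # values are produced lazily, one at a time


class SquaresIterable:
    """An iterable: every call to __iter__ starts a fresh pass over the data."""

    def __init__(self, n):
        self.n = n

    def __iter__(self):
        # Being a generator method, this returns a new iterator on each call.
        for i in range(self.n):
            yield i * i


gen = squares_generator(4)
first_pass = list(gen)   # consumes the generator
second_pass = list(gen)  # the generator is already exhausted

stream = SquaresIterable(4)
pass_one = list(stream)  # first full pass
pass_two = list(stream)  # a second pass works, because __iter__ restarts
```

The difference matters for any algorithm that needs more than one pass over its input: a plain generator will silently yield nothing the second time around, whereas an iterable can be streamed repeatedly without holding the whole dataset in memory.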

Tutorial on Mallet in Python

MALLET, “MAchine Learning for LanguagE Toolkit”, is a brilliant software tool. Unlike gensim, “topic modelling for humans”, which uses Python, MALLET is written in Java and spells “topic modeling” with a single “l”. Dandy.

Word2vec Tutorial

Performance Shootout of Nearest Neighbours: Querying

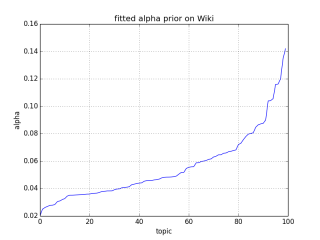

Asymmetric LDA Priors, Christmas Edition

The end of the year is proving crazy busy as usual, but gensim acquired a cool new feature that I just had to blog about. Ben Trahan sent a patch that allows automatic tuning of Latent Dirichlet Allocation (LDA) hyperparameters in gensim. This means that an optimal, asymmetric alpha can now be trained directly from your data.

RARE Technologies
