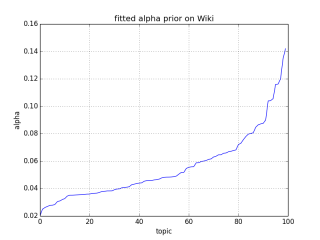

Asymmetric LDA Priors, Christmas Edition

The end of the year is proving crazy busy as usual, but gensim acquired a cool new feature that I just had to blog about. Ben Trahan sent a patch that allows automatic tuning of Latent Dirichlet Allocation (LDA) hyperparameters in gensim. This means that an optimal, asymmetric alpha can now be trained directly from your data.

Performance Shootout of Nearest Neighbours: Contestants

Performance Shootout of Nearest Neighbours: Intro

Parallelizing word2vec in Python

The final instalment on optimizing word2vec in Python: how to make use of multicore machines. You may want to read Part One and Part Two first.

Word2vec in Python, Part Two: Optimizing

Last weekend, I ported Google’s word2vec into Python. The result was clean, concise and readable code that plays well with other Python NLP packages. One problem remained: it ran 20x slower than the original C code, even after all the obvious NumPy optimizations.

Deep learning with word2vec and gensim

Neural networks have been a bit of a punching bag historically: neither particularly fast, nor robust or accurate, nor open to introspection by humans curious to gain insights from them. But things have been changing lately, with deep learning becoming a hot topic in academia with spectacular results. I decided to check out one deep learning algorithm via gensim.

RARE Technologies
