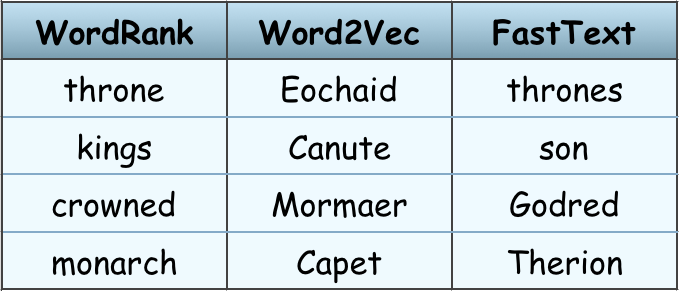

WordRank embedding: “crowned” is most similar to “king”, not word2vec’s “Canute”

Comparisons to Word2Vec and FastText with TensorBoard visualizations. With various embedding models appearing recently, it can be difficult to choose one. Should you simply go with the ones widely used in the NLP community, such as Word2Vec, or could some other model be more accurate for your use case? There are some evaluation metrics …
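Comparisons like "crowned is most similar to king" come down to ranking words by similarity in an embedding space. Below is a minimal sketch of that ranking, cosine-similarity nearest neighbours, written in plain Python. The toy vectors are made up purely for illustration and are not output from WordRank, word2vec or fastText. Gensim offers a comparable lookup through `KeyedVectors.most_similar`, but this sketch does not use Gensim.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word, vectors, topn=3):
    """Rank every other word by cosine similarity to `word`, highest first."""
    query = vectors[word]
    scores = [(other, cosine(query, vec))
              for other, vec in vectors.items() if other != word]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:topn]

# Hypothetical toy vectors, invented for illustration (not trained by any model).
toy_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.75, 0.2],
    "crown": [0.7, 0.9, 0.05],
    "apple": [0.1, 0.2, 0.95],
}

print(most_similar("king", toy_vectors, topn=2))
```

Running the same query word against vectors from different models and comparing the neighbour lists is one informal way to see how the embeddings differ.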

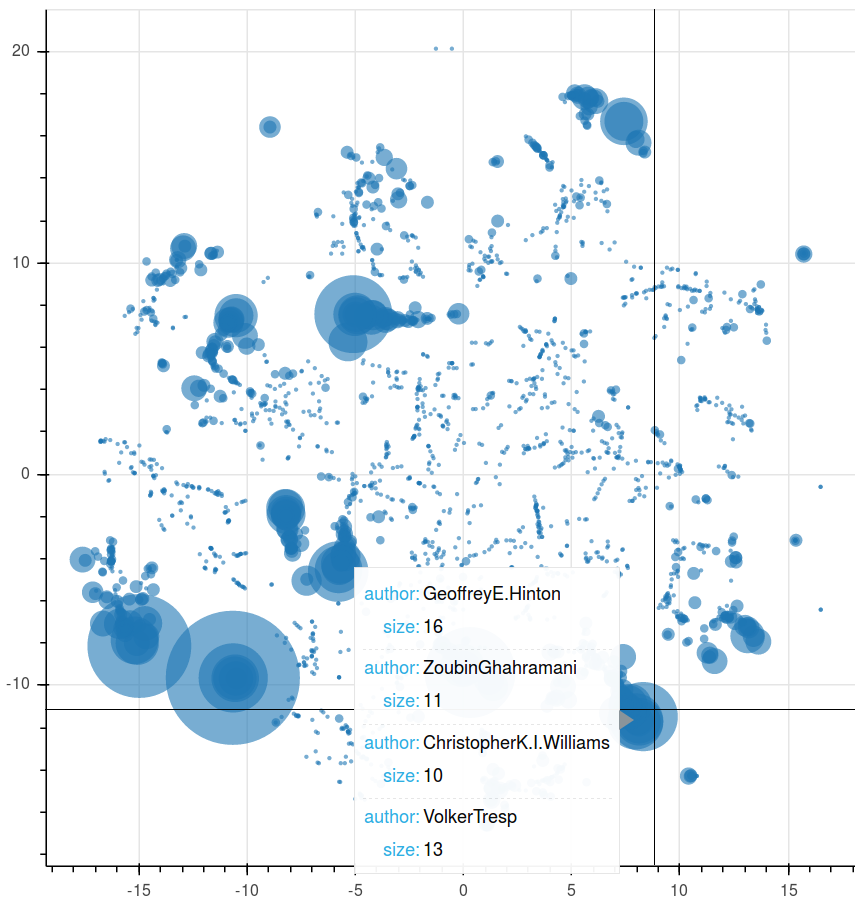

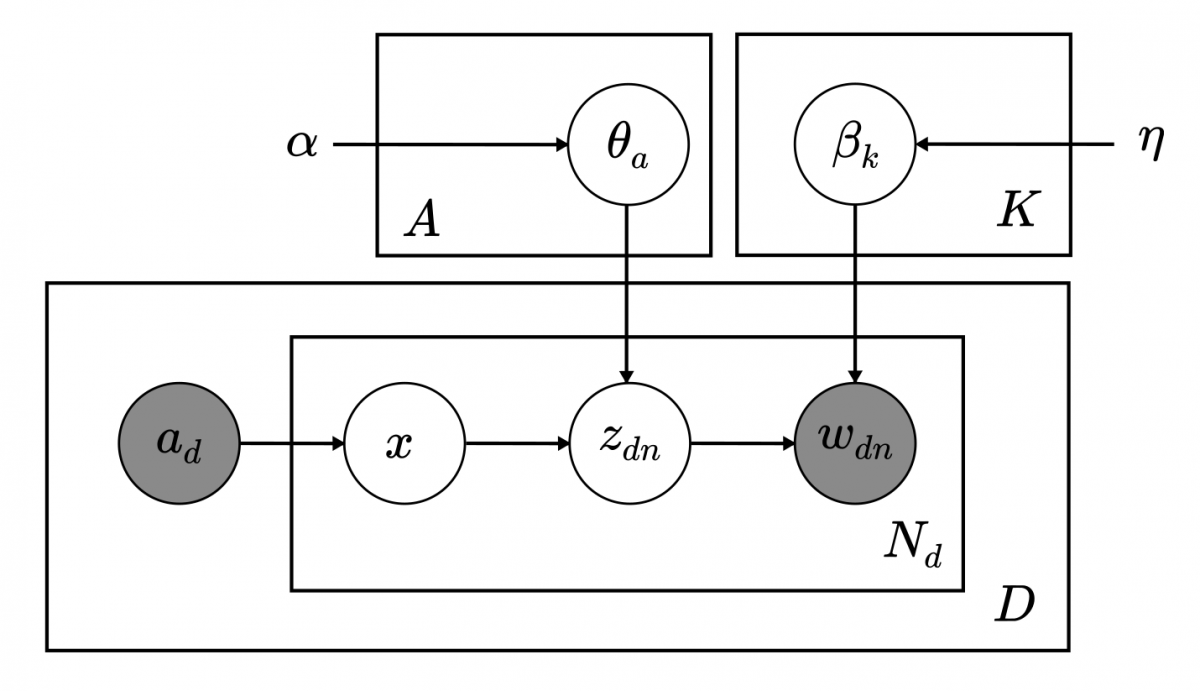

New Gensim feature: Author-topic modeling. LDA with metadata.

The author-topic model is an extension of Latent Dirichlet Allocation that allows data scientists to build topic representations of attached author labels. These author labels can represent any kind of discrete metadata attached to documents, for example, tags on posts on the web. In December of 2016, I wrote a blog post explaining that a Gensim implementation was on its …
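Gensim's `AuthorTopicModel` takes the author labels as an `author2doc` dictionary that maps each author (or any other discrete label, such as a tag) to the indices of the documents it is attached to. The sketch below assumes the metadata starts out as one list of authors per document and inverts it into that shape; the author names are hypothetical.

```python
from collections import defaultdict

def build_author2doc(doc_authors):
    """Invert per-document author (or tag) lists into an
    author -> [document index, ...] mapping."""
    author2doc = defaultdict(list)
    for doc_id, authors in enumerate(doc_authors):
        for author in authors:
            author2doc[author].append(doc_id)
    return dict(author2doc)

# Hypothetical metadata: one list of author labels per document in the corpus.
doc_authors = [
    ["alice", "bob"],    # document 0 has two authors
    ["bob"],             # document 1
    ["carol", "alice"],  # document 2
]

author2doc = build_author2doc(doc_authors)
print(author2doc)
```

The resulting dictionary can then be passed along with a bag-of-words corpus, e.g. `AuthorTopicModel(corpus=corpus, id2word=dictionary, author2doc=author2doc, num_topics=10)`, where `corpus`, `dictionary` and the topic count are placeholders for your own data and settings.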

Topic Modelling with Latent Dirichlet Allocation: How to pre-process data and tune your model. New tutorial.

If you’ve learned how to train topic models in Gensim but aren’t able to get satisfying results, we have a new tutorial on GitHub that will help you get on the right track. Primarily, you will learn about pre-processing text data for the LDA model. You will also get some tips about how to set the parameters …
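The tutorial's exact steps are not reproduced here, but a typical pre-processing pass for LDA tokenizes the text and then drops words that are too rare or too common to be informative. The sketch below is an assumption-laden illustration: the regex tokenizer is my own choice, and `filter_extremes` here is a hypothetical pure-Python helper that imitates the idea behind Gensim's `Dictionary.filter_extremes(no_below=..., no_above=...)`, where `no_below` is an absolute document count and `no_above` a fraction of all documents.

```python
import re
from collections import Counter

def tokenize(doc):
    """Lowercase, split on non-alphanumeric characters, and drop pure
    numbers and single-character tokens."""
    tokens = re.findall(r"[a-z0-9]+", doc.lower())
    return [t for t in tokens if not t.isdigit() and len(t) > 1]

def filter_extremes(docs, no_below=2, no_above=0.5):
    """Keep tokens that occur in at least `no_below` documents and in at
    most a fraction `no_above` of all documents."""
    doc_freq = Counter(token for doc in docs for token in set(doc))
    n_docs = len(docs)
    keep = {t for t, df in doc_freq.items()
            if df >= no_below and df / n_docs <= no_above}
    return [[t for t in doc if t in keep] for doc in docs]

# Hypothetical toy corpus.
raw_docs = [
    "The cat sat on the mat.",
    "The dog sat on the log.",
    "The cat chased the dog in 2016.",
    "A bird sang.",
]

tokenized = [tokenize(doc) for doc in raw_docs]
filtered = filter_extremes(tokenized, no_below=2, no_above=0.5)
print(filtered)
```

Note how "the" disappears without an explicit stopword list because it occurs in more than half of the documents, while one-off words such as "mat" are dropped for being too rare.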

Author-topic models: why I am working on a new implementation

Author-topic models promise to give data scientists a tool to gain insight into authorship and content simultaneously, in terms of latent topics. The model is closely related to Latent Dirichlet Allocation (LDA). Basically, each author can be associated with multiple documents, and each document can be attributed to multiple authors. The model learns topic representations for each author, so that …

Gensim at PyCon France 2016

PyCon France 2016 was held in Rennes from the 13th to the 16th of October at Telecom Bretagne. Gensim had a presence on both conference days, with Bhargav Srinivasa presenting his talk “Topic Modelling with Python and Gensim” on day 1 and me presenting my workshop “Twitter User Classification with Gensim and Scikit-learn” (had a pretty boring sounding title before …

Three Sprints in India (To Say Nothing of PyCon)

I was very happy to visit India this October to run three Gensim coding sprints, give workshops and attend the PyCon India conference. Many thanks to our Incubator programme student Devashish Deshpande for being my host. PyCon India was a very friendly event of 500 attendees, with workshops on Friday and conference talks over Saturday and Sunday. My favorite PyCon moment was the keynote …

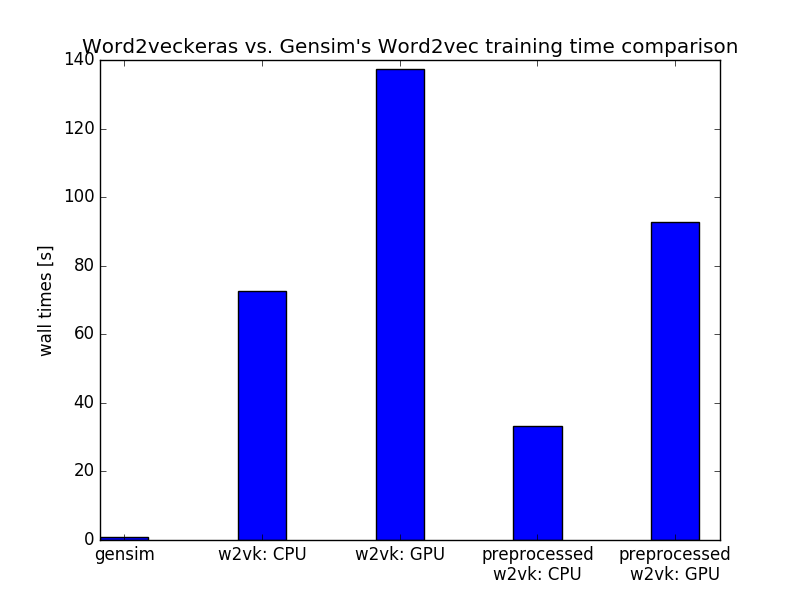

Gensim word2vec on CPU faster than Word2veckeras on GPU (Incubator Student Blog)

Word2Vec became popular mainly thanks to huge improvements in training speed, producing high-quality word vectors of much higher dimensionality than the neural network language models widely used at the time. Word2Vec is an unsupervised method that can process potentially huge amounts of data without the need for manual labeling. There is really no limit to the size of a dataset that can …
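Word2Vec needs no manual labels because its training examples come straight from raw text: in the skip-gram variant, each word is paired with the words around it. The sketch below generates those (center, context) pairs with a fixed window. This is a simplification: the original word2vec implementation randomly shrinks the window for each word, and the actual training (negative sampling or hierarchical softmax) is not shown.

```python
def skipgram_pairs(sentence, window=2):
    """Generate (center, context) training pairs for skip-gram, using a
    fixed window of `window` words on each side of the center word."""
    pairs = []
    for i, center in enumerate(sentence):
        start = max(0, i - window)
        end = min(len(sentence), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, sentence[j]))
    return pairs

sentence = ["gensim", "trains", "word", "vectors"]
pairs = skipgram_pairs(sentence, window=1)
print(pairs)
```

Because every sentence yields pairs this way, adding more unlabeled text directly adds more training examples, which is why dataset size is limited mainly by training speed.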

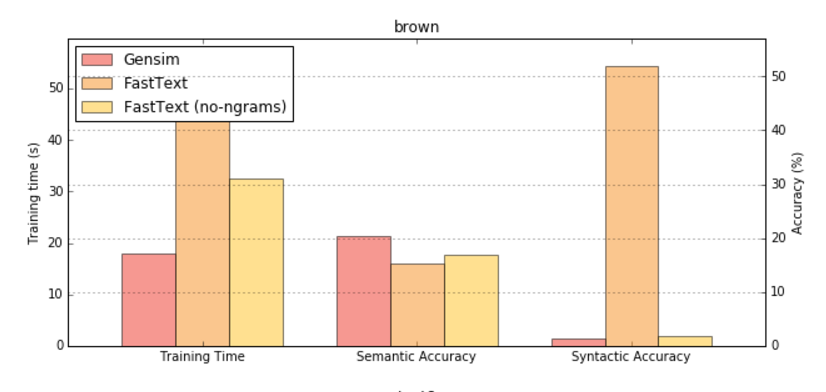

FastText and Gensim word embeddings

Facebook Research recently open-sourced a great project: fastText, a fast (no surprise) and effective method to learn word representations and perform text classification. I was curious about comparing these embeddings to other commonly used embeddings, so word2vec seemed like the obvious choice, especially considering that fastText embeddings are an extension of word2vec. The main goal of the fastText …
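The way fastText extends word2vec is by representing each word through its character n-grams: the word is wrapped in `<` and `>` boundary markers, substrings of length 3 to 6 are extracted, and the word vector is built from the vectors of these n-grams plus a vector for the whole word. The sketch below shows only the n-gram extraction step; the hashing of n-grams into buckets and the training itself are left out.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """Return fastText-style character n-grams of `word`: the word is wrapped
    in '<' and '>' boundary markers and every substring with a length from
    min_n to max_n is taken."""
    wrapped = f"<{word}>"
    ngrams = []
    for n in range(min_n, max_n + 1):
        for i in range(len(wrapped) - n + 1):
            ngrams.append(wrapped[i:i + n])
    return ngrams

print(char_ngrams("where", min_n=3, max_n=3))
```

The boundary markers keep the trigram `her` taken from inside "where" distinct from the word `<her>`. Sharing n-grams also lets the model build vectors for words it never saw during training, which plain word2vec cannot do.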

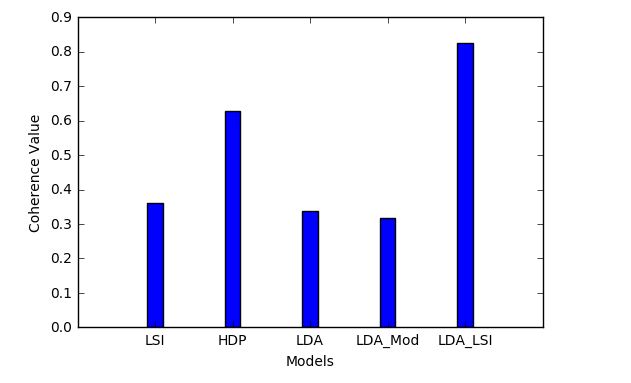

More topic coherence use-cases

Recently, while doing some topic modelling, I encountered a few problems, such as: how to use the topic coherence (TC) pipeline with other topic models (e.g. HDP); how to find the optimal number of topics for LDA; and, since LSI is brilliant at ranking its topics, whether LDA can do that too. If you face such problems, this blog might be able …
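One way to rank LDA topics the way LSI ranks its own is to score each topic with a coherence measure. The sketch below implements the UMass measure (the measure behind `coherence='u_mass'` in Gensim's `CoherenceModel`) in plain Python, using the +1 smoothing of its usual definition; Gensim's own implementation may differ in smoothing details. The toy documents and topics are hypothetical, and each topic's words are assumed to be listed from most to least probable and to occur in the corpus.

```python
import math

def umass_coherence(topic_words, docs):
    """UMass coherence of one topic. `topic_words` are ordered from most to
    least probable; `docs` are tokenized documents. Scores are <= 0 here,
    and values closer to 0 mean a more coherent topic. Every topic word
    must occur in at least one document."""
    doc_sets = [set(doc) for doc in docs]

    def doc_count(*words):
        # Number of documents that contain every given word.
        return sum(1 for d in doc_sets if all(w in d for w in words))

    score = 0.0
    for i in range(1, len(topic_words)):
        for j in range(i):
            w_i, w_j = topic_words[i], topic_words[j]
            score += math.log((doc_count(w_i, w_j) + 1) / doc_count(w_j))
    return score

def rank_topics(topics, docs):
    """Return (topic index, coherence) pairs, most coherent first."""
    scores = [(k, umass_coherence(words, docs)) for k, words in enumerate(topics)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

# Hypothetical tokenized corpus and topics.
docs = [
    ["cat", "dog", "pet"],
    ["cat", "dog"],
    ["dog", "pet"],
    ["stock", "market"],
    ["stock", "cat"],
]
topics = [["cat", "dog", "pet"], ["stock", "pet", "market"]]

print(rank_topics(topics, docs))
```

For choosing the number of topics, one common approach is to train LDA with several candidate values, average the coherence of each model's topics, and keep the best-scoring model.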

RARE Technologies