Translation Matrix: how to connect “embeddings” in different languages?

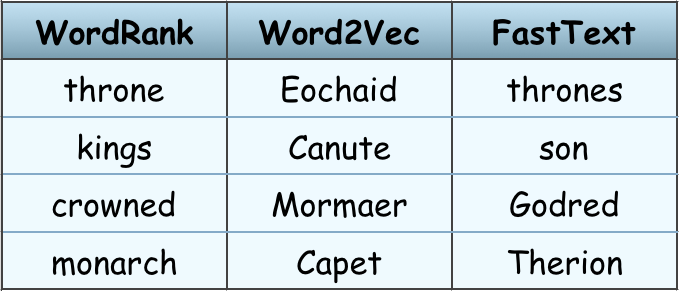

WordRank embedding: “crowned” is most similar to “king”, not word2vec’s “Canute”

Comparisons to Word2Vec and FastText with TensorBoard visualizations. With various embedding models appearing recently, it can be difficult to choose one. Should you simply go with the ones widely used in the NLP community, such as Word2Vec, or could some other model be more accurate for your use case? There are some evaluation metrics …
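Comparisons like "crowned is most similar to king" usually come down to nearest-neighbour queries ranked by cosine similarity. Below is a minimal sketch of such a query in plain Python. The toy three-dimensional vectors are made up for illustration and are not output from WordRank, word2vec or any trained model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(query, vectors, topn=3):
    """Rank every other word by cosine similarity to `query`, highest first."""
    q = vectors[query]
    scores = [(word, cosine(q, vec)) for word, vec in vectors.items() if word != query]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:topn]


# Hypothetical toy embeddings, NOT taken from any real model.
toy_vectors = {
    "crowned": [0.9, 0.1, 0.0],
    "king": [0.8, 0.2, 0.1],
    "canute": [0.3, 0.9, 0.2],
    "banana": [0.0, 0.1, 0.9],
}

print(most_similar("crowned", toy_vectors, topn=2))  # "king" ranks first, "canute" second
```

Different embedding models place words differently, so the same query can return different neighbours; that is what comparisons between models measure.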

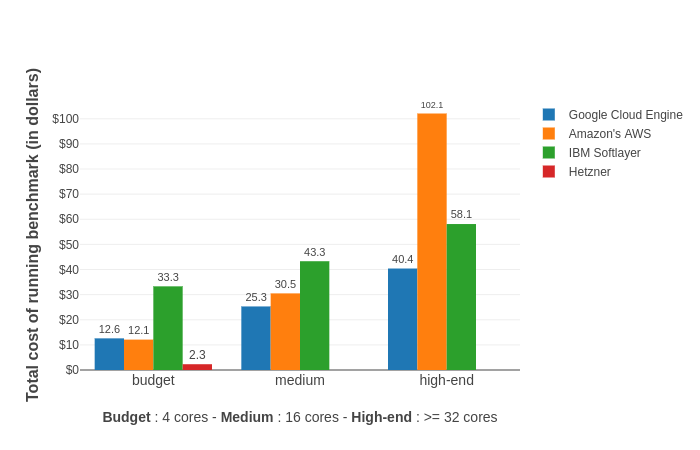

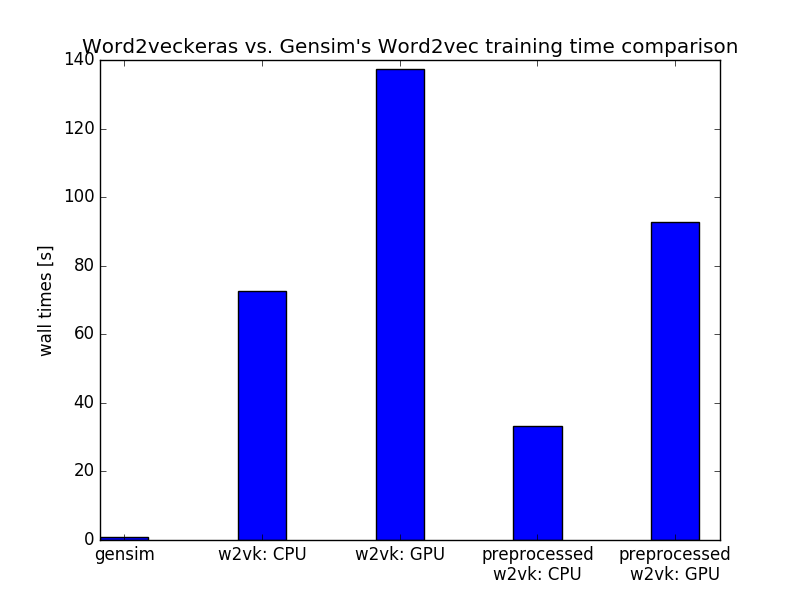

Gensim word2vec on CPU faster than Word2veckeras on GPU (Incubator Student Blog)

Word2Vec became so popular mainly thanks to huge improvements in training speed, producing high-quality word vectors of much higher dimensionality than the then widely used neural network language models. Word2Vec is an unsupervised method that can process potentially huge amounts of data without the need for manual labeling. There is really no limit to the size of a dataset that can …
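Word2Vec needs no manual labeling because its training examples come directly from raw text: each word is paired with the words around it. Below is a minimal sketch of generating skip-gram (center, context) pairs with a fixed window. The original word2vec code and gensim also randomly shrink the window, subsample frequent words, and train with negative sampling or hierarchical softmax. None of that is shown here.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for each token and its neighbours within `window`."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


sentence = "the quick brown fox".split()
for pair in skipgram_pairs(sentence, window=1):
    print(pair)
```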

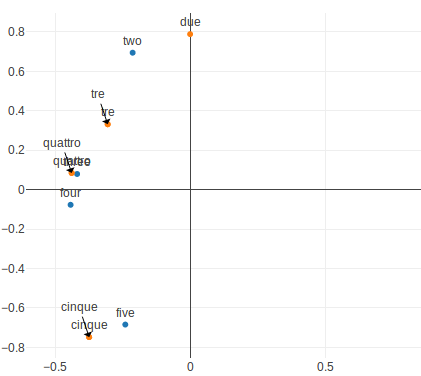

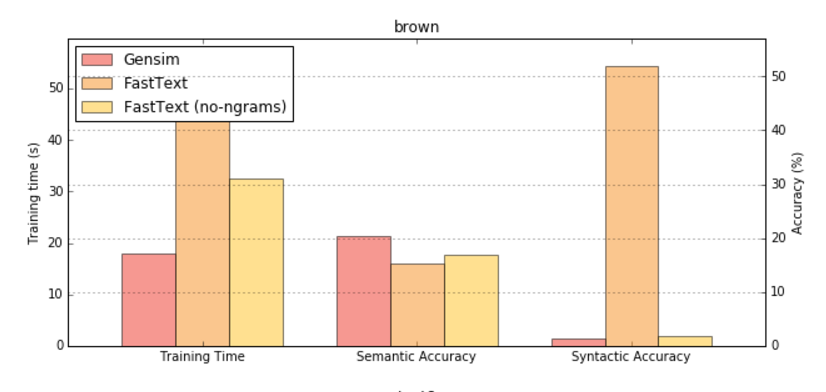

FastText and Gensim word embeddings

Facebook Research open-sourced a great project recently – fastText, a fast (no surprise) and effective method to learn word representations and perform text classification. I was curious about comparing these embeddings to other commonly used embeddings, so word2vec seemed like the obvious choice, especially considering fastText embeddings are an extension of word2vec. The main goal of the Fast Text …
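The main way fastText extends word2vec is by representing each word as a bag of character n-grams, with `<` and `>` marking word boundaries. This lets it build vectors even for words unseen in training. Below is a minimal sketch of the n-gram extraction step only. The default range of 3 to 6 characters follows fastText's `minn`/`maxn` defaults. The hashing of n-grams into buckets and the extra whole-word token are left out.

```python
def char_ngrams(word, minn=3, maxn=6):
    """Character n-grams of `word` wrapped in boundary markers, fastText-style."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(minn, maxn + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams


print(char_ngrams("where", minn=3, maxn=3))
# ['<wh', 'whe', 'her', 'ere', 're>']
```

A word's vector is then built from the vectors of its n-grams, so "where" and "whereas" share parameters through common n-grams such as `whe` and `her`.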

Does Python Stand a Chance in Today’s World of Data Science? [video]

Earlier this summer, our director, Radim Řehůřek, led a talk about the state of Python in today’s world of Data Science. The talk covers how businesses are using Python for commercial success, Python vs Java, and an interesting comparison of the popular latent semantic analysis (SVD) and word2vec algorithms running on different platforms: Spark MLlib, gensim, scikit-learn …

Making sense of word2vec

One year ago, Tomáš Mikolov (together with his colleagues at Google) made some ripples by releasing word2vec, an unsupervised algorithm for learning the meaning behind words. In this blog post, I’ll evaluate some extensions that have appeared over the past year, including GloVe and matrix factorization via SVD.
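Matrix factorization via SVD is a count-based alternative to word2vec. The usual recipe is to count word–context co-occurrences, reweight the counts with positive pointwise mutual information (PPMI), and keep the top singular directions. Below is a minimal NumPy sketch under those assumptions. The window size, the PPMI weighting, and scaling `U` by the singular values are one common set of choices and are not necessarily the exact setup evaluated in the post.

```python
import numpy as np


def ppmi_svd_embeddings(sentences, window=2, dim=2):
    """Word vectors from a truncated SVD of a PPMI-weighted co-occurrence matrix."""
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    counts = np.zeros((len(vocab), len(vocab)))
    # Count co-occurrences within a symmetric window.
    for s in sentences:
        for i, w in enumerate(s):
            for j in range(max(0, i - window), min(len(s), i + window + 1)):
                if j != i:
                    counts[index[w], index[s[j]]] += 1
    total = counts.sum()
    row = counts.sum(axis=1, keepdims=True)
    col = counts.sum(axis=0, keepdims=True)
    # PMI = log(P(w, c) / (P(w) P(c))); zero counts are forced to 0 below.
    with np.errstate(divide="ignore", invalid="ignore"):
        pmi = np.log(counts * total / (row * col))
    ppmi = np.where(counts > 0, np.maximum(pmi, 0.0), 0.0)
    # Keep the top `dim` singular directions, scaled by their singular values.
    u, s, _ = np.linalg.svd(ppmi)
    return vocab, u[:, :dim] * s[:dim]


corpus = [s.split() for s in [
    "the king rules the land",
    "the queen rules the land",
    "i eat a banana",
]]
vocab, vecs = ppmi_svd_embeddings(corpus, window=2, dim=2)
print(vocab, vecs.shape)
```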

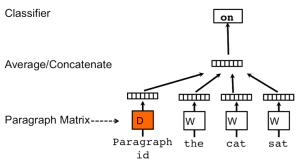

Doc2vec tutorial

The latest gensim release, 0.10.3, has a new class named Doc2Vec. All credit for this class, which is an implementation of Quoc Le & Tomáš Mikolov: “Distributed Representations of Sentences and Documents”, as well as for this tutorial, goes to the illustrious Tim Emerick. Doc2vec (aka paragraph2vec, aka sentence embeddings) adapts the word2vec algorithm to unsupervised learning of continuous …

Word2vec Tutorial

Parallelizing word2vec in Python

The final instalment on optimizing word2vec in Python: how to make use of multicore machines. You may want to read Part One and Part Two first.
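One common way to use multiple cores is a producer/consumer setup. A producer splits the corpus into chunks and puts them on a bounded job queue, and several worker threads pull chunks and train on them. Threads only run truly in parallel if the training routine itself releases Python's GIL, as compiled (C/Cython) code can. In the sketch below, `train_chunk` is a hypothetical pure-Python stand-in that just counts words, so the example shows the coordination pattern only and gives no real speedup.

```python
import itertools
import queue
import threading


def train_chunk(chunk):
    """Hypothetical stand-in for a compiled training routine that releases the GIL.
    Here it only counts words so the example runs anywhere."""
    return sum(len(sentence) for sentence in chunk)


def parallel_train(sentences, workers=4, chunksize=100):
    """Feed chunks of sentences to worker threads through a bounded job queue."""
    jobs = queue.Queue(maxsize=2 * workers)  # bounded: producer can't run far ahead
    totals = []
    lock = threading.Lock()

    def worker():
        while True:
            chunk = jobs.get()
            if chunk is None:  # sentinel: no more work
                break
            done = train_chunk(chunk)
            with lock:
                totals.append(done)

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(workers)]
    for t in threads:
        t.start()
    it = iter(sentences)
    while True:
        chunk = list(itertools.islice(it, chunksize))
        if not chunk:
            break
        jobs.put(chunk)  # blocks while the queue is full
    for _ in threads:
        jobs.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    return sum(totals)


corpus = [["hello", "world"]] * 1000
print(parallel_train(corpus, workers=4, chunksize=50))
```

The bounded queue keeps memory flat on streamed corpora, because the producer waits for workers instead of reading the whole dataset ahead of time.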


RARE Technologies